Climate policy—modelling vs. market-based measures

This is the final installment of a three-part essay series on climate science and policy in Canada and around the world.

In part one of this series, we observed that the globally dominant climate policy framework picks an arbitrary upper bound on acceptable global warming (1.5 degrees Celsius), expected to develop over an equally arbitrary period of time (through 2100). That framework is based on speculative computer modelling that exaggerates the climate’s sensitivity to greenhouse gases (GHG), and it is contradicted by real-world evidence of climate sensitivity gathered from satellite measurements of the climate over the last 20 to 30 years.

In part two, we observed that economic analysis suggests continuing to chase today’s model-based targets, timelines and policy proposals (i.e. net-zero carbon by 2050) will do far more harm than good in terms of human goals such as functioning, efficient economies. In particular, we showed, using the climate-economic model of recent Nobel laureate William Nordhaus, that the popular goal of limiting global warming to 1.5°C is so aggressive that it would actually be worse than if governments did nothing to restrict GHG emissions.

In this final entry in the series, we ask the question: If we aren’t to work backwards from models of the future, how should we address the risks of climate change?

It's a question I’ve studied since 1998. Surprisingly, despite the overwhelming expansion of climate change science and policy research, little has changed since that time in terms of weighing whether a policy based on achieving speculative future targets, timelines and specific achievement approaches is better or worse than a policy of continuously measurable management of GHG emissions and damages via pricing-based policies.

To understand what those “continuous measurable improvement” policies would look like, it helps to examine how this is done, on a routine basis, for the provision of goods and services critical to present-day health, safety and environmental protection. Such goods and services would include medical technologies, chemicals in consumer goods, food sanitation testing and other similar risk-management activities we use to address thousands of potential life-and-death risks every day in developed economies. And for this, we need to introduce the useful idea of “Quality Control and Quality Assurance” as it’s used today.

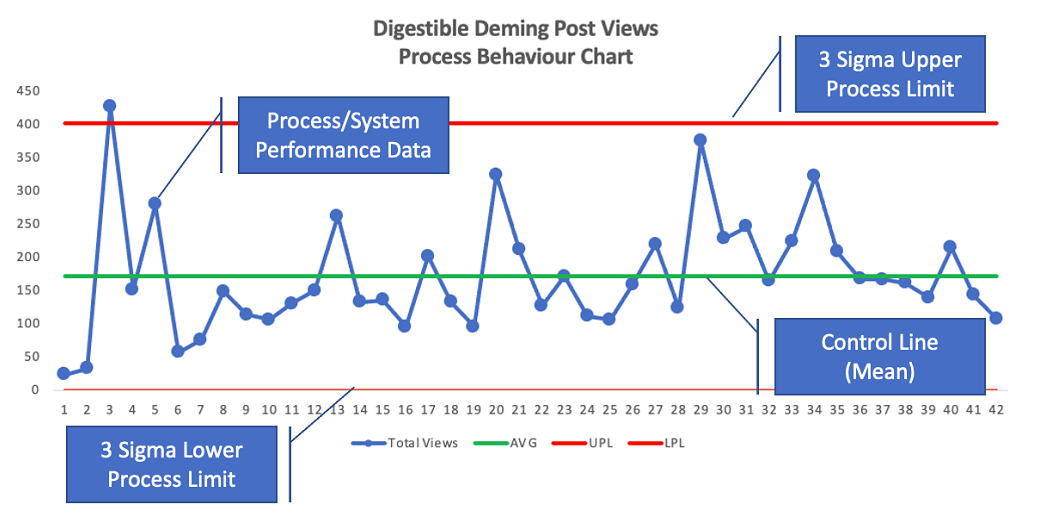

This essay, by Toronto-based consultant Christopher Chapman, offers a good introduction to what are generally referred to as “quality control charts,” as pioneered by Dr. W. Edwards Deming, who’s considered the “father of quality management.” The first figure is an example (from Chapman) of what a basic quality control or “process behaviour chart” looks like.

In this chart, the green bar represents the desired control level of meeting a standard. In the case of climate change, this target might be restricting GHG emissions to achieve a stated goal of limiting global warming to 1.5°C. The two red bars depict the range of possible variation around that target over time. In this example, if the blue line were variations in the global average surface temperature, the upper red line would be the “OMG, we roast to death” line and the lower red line would be the “OMG, we freeze to death” line.

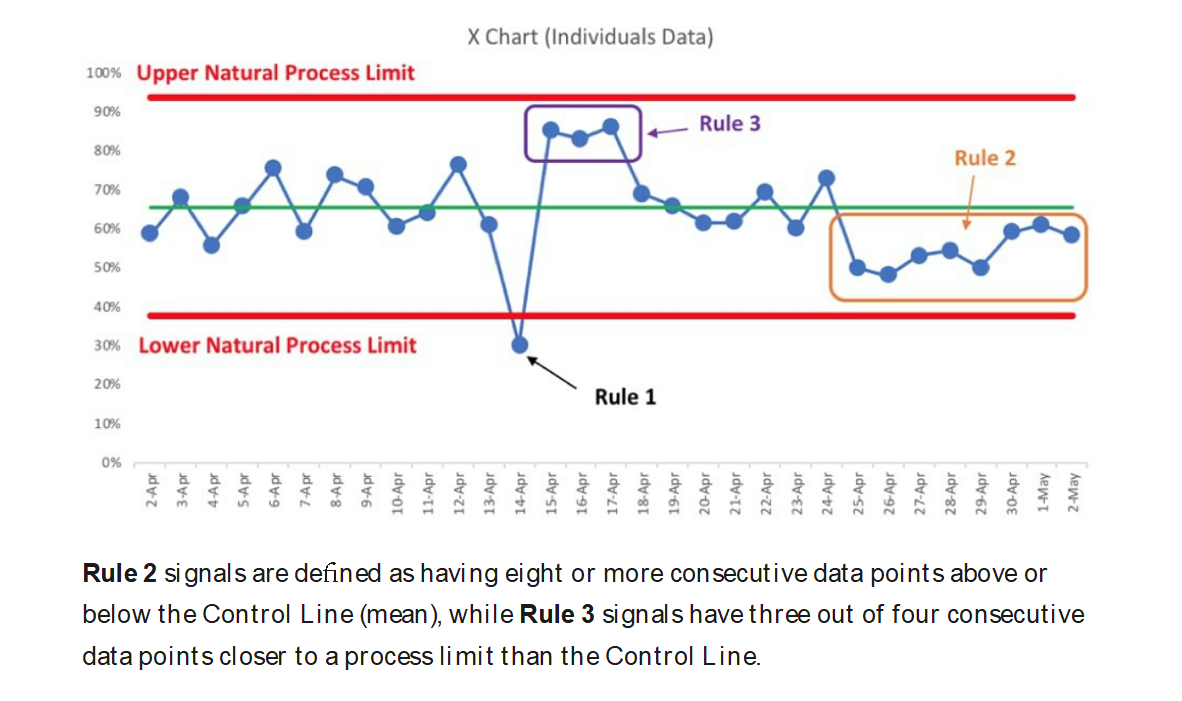

The value of this approach is that one can, in real time and using verifiable measured data, chart a course forward to correct unacceptable deviations from the mean. As Chapman then observes, one can set reaction plans (or rules) in place for deviations too far from the mean.

As this second chart shows, different trigger points are possible in such a system to indicate when some change is needed. One violation (rule 1) might trigger only moderate scrutiny, three violations (rule 2) still more scrutiny, and eight consecutive violations (rule 3) might signal that significant change is needed.
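The reaction rules described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the essay does not define precisely what counts as a "violation," so the rule definitions below follow one common convention for process behaviour charts, and the function name `check_rules` and its parameters are hypothetical.

```python
from typing import Sequence, Set


def check_rules(values: Sequence[float], centre: float,
                lower: float, upper: float) -> Set[int]:
    """Return the numbers of the reaction rules triggered by a series.

    Assumed rule definitions (not taken from the essay's chart):
      1: any single point outside the control limits
      2: at least three of four consecutive points nearer to one
         limit than to the centre line
      3: eight consecutive points on the same side of the centre line
    """
    triggered: Set[int] = set()

    # Rule 1: a single point beyond either limit.
    if any(v > upper or v < lower for v in values):
        triggered.add(1)

    # Rule 2: three of four consecutive points past the halfway mark
    # between the centre line and the same limit.
    upper_mid = (centre + upper) / 2
    lower_mid = (centre + lower) / 2
    for i in range(len(values) - 3):
        window = values[i:i + 4]
        if (sum(v > upper_mid for v in window) >= 3
                or sum(v < lower_mid for v in window) >= 3):
            triggered.add(2)
            break

    # Rule 3: a run of eight points on one side of the centre line.
    run, side = 0, 0
    for v in values:
        s = 1 if v > centre else -1 if v < centre else 0
        if s != 0 and s == side:
            run += 1
        else:
            side = s
            run = 1 if s != 0 else 0
        if run >= 8:
            triggered.add(3)
            break

    return triggered
```

For instance, a series of eight readings that all sit slightly above the centre line, but well inside the limits, would trigger only rule 3 under these assumed definitions.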

With regard to climate policies, many economists and policy analysts have observed that governmental control systems based on speculative modelling of the future have constantly failed to achieve GHG control targets or meet mandated timelines. By contrast, market-based measures such as the oft-discussed (but too rarely implemented) revenue-neutral carbon tax, or GHG-emission pricing more broadly, might be able to do so, and such measures have a history of success in addressing environmental problems comparable to climate change (stratospheric ozone depletion and acid rain are two examples).

These latter approaches (taxes and pricing) are deemed superior to command-and-control alternatives precisely because they would allow this kind of ongoing assessment of progress and refinement of control over time. Unfortunately, the way governments have generally chosen to set and administer carbon taxes (and other emission-pricing schemes) tends to disfavour any adjustments that do not move upward, negating the continuous-refinement value of a real-time control system.

What would this look like for climate policy in practice?

- Replacing (not simply augmenting) existing GHG control methods based on predictive modelling with market-based price signals based on real-time achievement of the optimal “climate balance,” measured through price signals. This would require adapting current versions of carbon tax and pricing schemes to allow for real-time setting of emission prices that are tied to actual manifestations of warming rather than modelled scenarios of abstract social costs of carbon.

- Relying more on empirical measurement systems which give real-time feedback about how climate balance is being achieved, such as satellite temperature measurements and ambient GHG measurements rather than modelled values.

- Establishing simple, understandable, predictable “behaviours” to be triggered by unacceptable deviations from the golden mean we are seeking to achieve with climate policy (e.g. raising or lowering the carbon price, or raising or lowering GHG emission caps and limits, in accordance with the deviation from the acceptable level of warming observed in real time).
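To make the third point concrete, here is a minimal Python sketch of one such predictable "behaviour": a carbon price that moves up or down by a fixed step when observed warming leaves an acceptable band. Everything in it is a hypothetical illustration rather than a proposal from this essay: the function name `adjust_price`, the band width, the step size and the numbers in the usage note are all assumptions.

```python
def adjust_price(price: float, observed: float, target: float,
                 tolerance: float, step: float) -> float:
    """Return the next-period carbon price under a simple band rule.

    Hypothetical rule: raise the price by `step` when observed warming
    exceeds target + tolerance, lower it by `step` when observed
    warming falls below target - tolerance, and otherwise leave it
    unchanged. The price is never allowed to fall below zero.
    """
    if observed > target + tolerance:
        return price + step
    if observed < target - tolerance:
        return max(0.0, price - step)
    return price
```

With an assumed price of 50.0, a target of 1.0, a tolerance of 0.2 and a step of 5.0, an observed value of 1.3 would raise the price to 55.0, an observed value of 0.7 would lower it to 45.0, and an observed value of 1.1 would leave it unchanged. The point of the design is symmetry: the same rule that raises the price can also lower it.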

Using pragmatic methods of measuring climate variability and impacts in real time, and making small incremental adjustments to emissions incentives via market-based interventions, may not be as dramatic a policy approach as aggressively attempting to address a speculative prediction of catastrophic climate change scheduled to occur sometime after 10 years by setting stringent concrete limits on GHG emissions today.

However, experience with society’s successful management of a myriad of risks, from small to large, suggests that using a market-driven, quality control-style system to manage climate risk would be much more likely to achieve a desired end, which is balancing the risks of climate change with human economic prosperity.
